Virtual Devices

A Virtual Device encompasses one or more flash memory dies, allowing the user to take advantage of the hardware isolation between separate dies. Dies are not shared across virtual devices, so I/O operations on one virtual device do not compete for die time with those on another. Some minimal latency may still arise from contention between virtual devices, caused by internal controller bottlenecks or, for virtual devices that share flash memory channels, by channel conflicts.

When a virtual device is created, several parameters are specified to define the characteristics of the virtual device. Virtual devices are created by using the SEFCreateVirtualDevice() function. The size of the virtual device is user-configurable and dependent on the resources available. When a new virtual device is created, it must be given a unique ID.

Because virtual devices represent hardware isolation, the SEF Unit will not wear level across the dies in different virtual devices. It is expected that virtual devices will be created when a SEF Unit is first set up and their geometry not subsequently altered. Deleting and creating new virtual devices is supported but may mix dies with different amounts of wear. In this case, wear leveling becomes the application’s responsibility.

Creation-time Parameters

virtualDeviceID: An identifier that will later be used to specify the created virtual device. This identifier must be unique across the entire SEF Unit. The maximum allowed ID is one less than the number of dies in the SEF Unit.

dieMap: Requests a rectangular region of dies that will be owned by the created virtual device.
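The ownership rules above (rectangular regions, no die shared between virtual devices, an upper bound on the ID) can be modeled with a small check. This is only an illustrative sketch in Python, not the SEF API: the `DieMap` class, its field names, `check_layout()`, and the channel-by-bank coordinate scheme are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DieMap:
    """Rectangular region of dies. Field names are illustrative assumptions."""
    start_channel: int
    start_bank: int
    num_channels: int
    num_banks: int

    def dies(self):
        """Return the set of (channel, bank) coordinates covered by the region."""
        return {(c, b)
                for c in range(self.start_channel, self.start_channel + self.num_channels)
                for b in range(self.start_bank, self.start_bank + self.num_banks)}


def check_layout(num_channels, num_banks, layout):
    """Validate a {virtual_device_id: DieMap} layout; return {die: owner_id}.

    Using the ID as a dict key makes IDs unique by construction.
    """
    num_dies = num_channels * num_banks
    owned = {}
    for vd_id, die_map in layout.items():
        # The text states only the upper bound: one less than the die count.
        if vd_id > num_dies - 1:
            raise ValueError(f"virtual device ID {vd_id} exceeds {num_dies - 1}")
        for die in die_map.dies():
            channel, bank = die
            if not (0 <= channel < num_channels and 0 <= bank < num_banks):
                raise ValueError(f"die {die} is outside the unit")
            if die in owned:
                raise ValueError(f"die {die} is already owned by virtual device {owned[die]}")
            owned[die] = vd_id
    return owned


# Hypothetical unit with 4 channels and 2 banks, split into two virtual devices.
layout = {0: DieMap(0, 0, 2, 2), 1: DieMap(2, 0, 2, 2)}
owned = check_layout(4, 2, layout)
```

In this layout each virtual device owns whole channels, so the two devices do not share a flash memory channel. A split by bank would still isolate dies but would leave both devices on every channel.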

defectStrategy: Specifies how defective ADUs are handled by the virtual device. The choices are Perfect, Packed, or Fragmented. The Perfect strategy hides defective ADUs through overprovisioning and mapping. Capacity is reserved, and ADUs are remapped to provide static and consistent flash memory addresses with contiguous ADU offsets. Packed also hides defective ADUs, presenting consistent flash memory addresses with contiguous ADU offsets, but the size of super blocks will shrink as the device wears. With the Fragmented strategy, the client is exposed to the device’s defect management. ADU offsets are non-contiguous, and super blocks will shrink in size as the device wears. Refer to Chapter 11 for more details.

numFMQueues: Specifies the number of Flash Media Queues per die. The maximum value is firmware-specific and can be retrieved with SEFGetInformation().

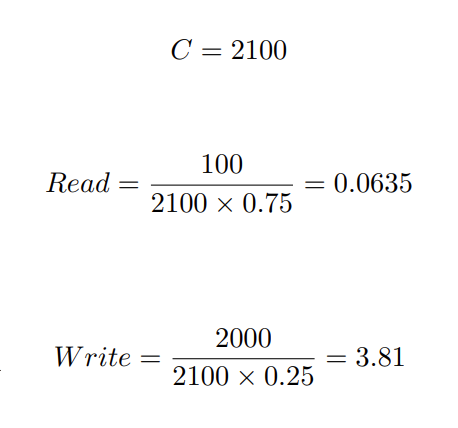

weights: Specifies the default weights for each Flash Media Queue. There is a default weight for each type of operation: read, erase for write, write, read for copy, erase for copy, and write for copy. The weights affect which queue will supply the next die operation. If all the weights are the same, the queues are serviced round-robin. If all the weights are 0, the queue number is used as a priority, with queue 0 being the highest priority. Otherwise, weighted fair queuing is used and the queue with the lowest current die time is selected. When an I/O completes, the weight for that I/O is added to the die time of its queue. The minimum die time across all the queues is then subtracted from every queue. Additionally, when a read operation arrives at the head of a queue whose die time is less than that of a currently executing write or erase, the write or erase is automatically suspended so that the read can execute.

When choosing different command weights for weighted fair queuing, it is necessary to know the actual die time for each command and the percent of die time that is desired for each command. Command die time can be found in the SEFInfo structure returned by SEFGetInformation(). In general, if there are $n$ commands whose die times are $c_i$, each with a desired share $p_i$ of die time (expressed as a fraction), the weights $w_i$ are then:

$$w_i = \frac{c_i}{p_i \sum_{j=1}^{n} c_j}$$

This will generally yield fractional weights. To make them integers, they can be normalized by multiplying all the weights by a scaling factor (e.g., 100 and/or the inverse of the smallest weight). For example, suppose reads should use 75% of the bandwidth and writes 25%, with reads hypothetically taking 100 µs and writes taking 2000 µs. The weights are then:

$$w_{\text{read}} = \frac{100}{0.75 \times (100 + 2000)} \approx 0.0635, \qquad w_{\text{write}} = \frac{2000}{0.25 \times (100 + 2000)} \approx 3.81$$

Normalizing by dividing by 0.0635 yields a read weight of 1 and a write weight of 60.
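The weight calculation and the queue selection rule can be tried out with a short Python sketch. It is a toy model, not SEF firmware or the SEF API: the function names are invented for the example, each command type is assumed to have its own always-busy queue on a single die, ties are assumed to go to the earlier queue, and read suspension is not modeled. The die times are the hypothetical 100 µs and 2000 µs from the example above.

```python
from fractions import Fraction


def raw_weights(die_times_us, shares):
    """Compute w_i = c_i / (p_i * sum_j c_j) for each command type."""
    total = sum(die_times_us.values())
    return {op: Fraction(c) / (Fraction(shares[op]) * total)
            for op, c in die_times_us.items()}


def integer_weights(weights):
    """Divide by the smallest weight and round to the nearest integer."""
    smallest = min(weights.values())
    return {op: round(w / smallest) for op, w in weights.items()}


def simulate(die_times_us, weights, num_ops):
    """Toy weighted fair queuing model with one busy queue per command type.

    The queue with the lowest accumulated die time issues next (ties go to
    the earlier queue). On completion its weight is added to its die time,
    then the minimum across all queues is subtracted from every queue.
    Returns the fraction of real die time spent on each command type.
    """
    ops = list(die_times_us)
    queue_time = {op: 0 for op in ops}
    busy_us = {op: 0 for op in ops}
    for _ in range(num_ops):
        op = min(ops, key=lambda name: queue_time[name])
        busy_us[op] += die_times_us[op]
        queue_time[op] += weights[op]
        floor = min(queue_time.values())
        for name in ops:
            queue_time[name] -= floor
    total = sum(busy_us.values())
    return {op: busy_us[op] / total for op in ops}


die_times_us = {"read": 100, "write": 2000}  # hypothetical die times in microseconds
shares = {"read": Fraction(3, 4), "write": Fraction(1, 4)}

weights = integer_weights(raw_weights(die_times_us, shares))
observed = simulate(die_times_us, weights, num_ops=6100)
print(weights)
print(observed)
```

With these inputs the integer weights come out as 1 for reads and 60 for writes, matching the worked example, and the simulated split of die time stays close to the requested 75% and 25%. Exact fractions are used for the raw weights so that the normalization step does not pick up floating-point rounding error.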